Building a Hand Tracking Interface

Dev Narang

August 2024


There's a scene in Iron Man where Tony Stark effortlessly manipulates holographic interfaces with just a wave of his hand. The way he could move, resize, and interact with digital objects in mid-air seemed like pure science fiction, and I had wanted to build a piece of that for a long time.

Try the interactive demo here →

The Inspiration

Growing up watching Iron Man, I was always fascinated by the seamless interaction between humans and technology. The idea of controlling digital interfaces with natural hand movements felt like the future of computing. Instead of being confined to keyboards and mice, what if we could interact with computers the way we interact with the physical world?

This curiosity led me down a rabbit hole of computer vision and machine learning, and eventually to building a hand tracking system that could detect and track hand movements in real time. But I didn't stop there: I wanted to make it practical and useful.

The Setup: Camera, Projector, and a Mat

My vision was simple: create an interactive surface where hand gestures could control digital content. I set up a camera above a gray holomat and connected it, through a computer, to a projector.

Here is how it works: the camera captures hand movements over the holomat, the computer processes these movements in real time, and the projector displays the interactive content back onto the same surface. It's like having a digital canvas that responds to your touch, except you don't even need to touch it.
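As a rough illustration (not the project's actual code), the camera-to-projector step can be sketched as a coordinate mapping. This sketch assumes the mat appears as an axis-aligned rectangle in the camera image; the `Rect` type, the function name, and the calibration numbers are all hypothetical. A real setup with a tilted camera would more likely need a perspective (homography) correction instead of this linear scaling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle in pixel coordinates."""
    left: float
    top: float
    width: float
    height: float


def camera_to_projector(point, mat_in_camera, projector_size):
    """Map a camera pixel over the mat to the matching projector pixel."""
    x, y = point
    # Normalize to 0..1 relative to where the mat sits in the camera image.
    u = (x - mat_in_camera.left) / mat_in_camera.width
    v = (y - mat_in_camera.top) / mat_in_camera.height
    # Clamp so a hand slightly off the mat still maps onto the projection.
    u = min(max(u, 0.0), 1.0)
    v = min(max(v, 0.0), 1.0)
    projector_width, projector_height = projector_size
    return (u * projector_width, v * projector_height)


# Hypothetical calibration: where the mat's corners were found in the camera frame.
mat = Rect(left=100, top=50, width=1080, height=608)
fingertip_on_projector = camera_to_projector((640, 354), mat, (1920, 1080))
```

With these example numbers, a fingertip at the center of the mat in the camera image lands at the center of a 1920x1080 projection.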

How Hand Tracking Actually Works

Hand tracking is about teaching a computer to "see" and understand human hands. Here's how it works in simple terms:

Computer Vision: The system uses advanced algorithms to process video frames and identify hand shapes and movements.

Machine Learning: Pre-trained models help the system recognize hand landmarks and gestures with high accuracy.

Detection: The system first identifies that there's a hand in the camera's view.

Landmark Identification: Once a hand is detected, the system identifies key points on the hand – like the tips of fingers, joints, and the palm.

Tracking: The system continuously monitors how these landmarks move, creating a real-time map of your hand's position and gestures.
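To make the landmark and tracking ideas concrete, here is a small sketch of what the data might look like once a model has produced landmarks. It assumes the 21-point hand layout used by MediaPipe Hands, where each landmark is a normalized (x, y) pair; the post does not name the model it used, so the indices and helper names here are assumptions for illustration.

```python
import math

# Indices from the 21-point MediaPipe Hands layout (an assumption; the
# project's actual model is not named in the post).
WRIST, THUMB_TIP, INDEX_TIP = 0, 4, 8


def to_pixels(landmarks, frame_width, frame_height):
    """Convert normalized (x, y) landmarks in 0..1 to pixel coordinates."""
    return [(x * frame_width, y * frame_height) for x, y in landmarks]


def landmark_speed(previous, current, dt):
    """Speed of one landmark, in pixels per second, between two frames."""
    (x0, y0), (x1, y1) = previous, current
    return math.hypot(x1 - x0, y1 - y0) / dt
```

Tracking then amounts to keeping the previous frame's landmarks around and comparing them with the current ones, for example by calling `landmark_speed` on the index fingertip every frame.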

Real-World Applications

Once I had the basic hand tracking working, the possibilities seemed endless:

Gaming

I created simple Space Invaders-style games where hand gestures controlled characters or objects. Pinch your thumb and pointer finger together to shoot, and move your hand left or right to move your character.
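One way the pinch-to-shoot and move-to-steer controls could be detected is sketched below. It assumes normalized landmarks in the MediaPipe Hands layout (thumb tip 4, index tip 8, wrist 0, middle-finger knuckle 9); the threshold value and function names are hypothetical and would need tuning on a real camera.

```python
import math

# MediaPipe Hands landmark indices (assumed layout, not confirmed by the post).
WRIST, THUMB_TIP, INDEX_TIP, MIDDLE_MCP = 0, 4, 8, 9


def is_pinching(landmarks, threshold=0.25):
    """True when thumb and index tips are close relative to the hand's size."""
    # Dividing by hand size keeps the test stable as the hand moves
    # closer to or farther from the camera.
    hand_size = math.dist(landmarks[WRIST], landmarks[MIDDLE_MCP])
    if hand_size == 0:
        return False
    pinch_gap = math.dist(landmarks[THUMB_TIP], landmarks[INDEX_TIP])
    return pinch_gap / hand_size < threshold


def ship_x(landmarks, screen_width):
    """Horizontal ship position follows the wrist's normalized x coordinate."""
    x = min(max(landmarks[WRIST][0], 0.0), 1.0)
    return x * screen_width
```

In a game loop, `is_pinching` would typically fire a shot only on the frame where it changes from False to True, so that holding a pinch does not shoot continuously.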

Measurement Tool

I also made a digital ruler. I could place objects on the holomat and use hand gestures to measure distances and angles with millimeter precision.
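The arithmetic behind a ruler like this can be sketched as follows, assuming the camera looks straight down and a reference object of known length has been used to calibrate a millimeters-per-pixel scale. The function names and the calibration approach are illustrative assumptions; the achievable precision depends on camera resolution and how well the calibration holds across the mat.

```python
import math


def mm_per_pixel(reference_length_mm, reference_length_px):
    """Scale factor from a reference object of known physical length."""
    return reference_length_mm / reference_length_px


def distance_mm(p1, p2, scale):
    """Physical distance between two pixel points, given the scale."""
    return math.dist(p1, p2) * scale


def angle_degrees(vertex, a, b):
    """Angle at `vertex` between the rays toward `a` and `b`, in 0..180."""
    angle_a = math.atan2(a[1] - vertex[1], a[0] - vertex[0])
    angle_b = math.atan2(b[1] - vertex[1], b[0] - vertex[0])
    degrees = abs(math.degrees(angle_a - angle_b)) % 360
    return 360 - degrees if degrees > 180 else degrees


# Hypothetical calibration: a 100 mm reference spans 400 pixels in the image.
scale = mm_per_pixel(100, 400)
length = distance_mm((0, 0), (300, 400), scale)
```

Here two fingertips 500 pixels apart correspond to 125 mm at a scale of 0.25 mm per pixel.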

Drawing and Design

The system became a digital canvas where I could draw and sketch using only hand movements. The precision wasn't perfect, but the natural interaction made the creative process feel more intuitive.

Virtual Control Panel

I created virtual buttons and sliders that responded to hand gestures. Want to adjust volume? Just swipe your hand. Need to change settings? Point and gesture. It was like having a touchscreen that didn't require a screen.
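A slider and a button of this kind could be sketched as below. The slider maps the hand's x position along a track to a value, and the button uses a dwell timer (hover for a moment to press) so that a hand passing over it does not trigger it by accident. The dwell approach, class name, and default values are assumptions for illustration, not details from the project.

```python
def slider_value(hand_x, track_left, track_right, minimum=0.0, maximum=100.0):
    """Map a hand x position along a slider track to a value in a range."""
    t = (hand_x - track_left) / (track_right - track_left)
    t = min(max(t, 0.0), 1.0)  # clamp so overshooting the track is harmless
    return minimum + t * (maximum - minimum)


class DwellButton:
    """Fires once when a fingertip hovers inside it for `hold_seconds`."""

    def __init__(self, left, top, width, height, hold_seconds=0.5):
        self.left, self.top = left, top
        self.width, self.height = width, height
        self.hold_seconds = hold_seconds
        self._entered_at = None
        self._fired = False

    def update(self, fingertip, now):
        """Call every frame; returns True on the frame the button fires."""
        x, y = fingertip
        inside = (self.left <= x <= self.left + self.width
                  and self.top <= y <= self.top + self.height)
        if not inside:
            # Leaving the button resets it so it can be pressed again.
            self._entered_at = None
            self._fired = False
            return False
        if self._entered_at is None:
            self._entered_at = now
        if not self._fired and now - self._entered_at >= self.hold_seconds:
            self._fired = True
            return True
        return False
```

In use, `now` would come from something like `time.monotonic()`, and the fingertip position would be the index fingertip already mapped into the projected interface's coordinates.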

Challenges and Solutions

Building this system wasn't without its challenges. Lighting conditions affected accuracy, sudden hand movements caused tracking issues, and keeping interactions smooth and responsive required optimization. The key was finding the right balance between accuracy and performance.
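One common way to trade accuracy against responsiveness is to smooth the landmark positions with an exponential moving average. The sketch below is an example of that general technique, not necessarily what this project used; the class name and the default `alpha` are hypothetical.

```python
class LandmarkSmoother:
    """Exponential moving average over a stream of (x, y) points.

    Higher alpha follows the hand more closely (responsive but jittery);
    lower alpha smooths more (stable but laggy).
    """

    def __init__(self, alpha=0.5):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self._state = None

    def update(self, point):
        """Feed the newest raw point; returns the smoothed point."""
        if self._state is None:
            # First sample: nothing to blend with yet.
            self._state = tuple(point)
        else:
            a = self.alpha
            self._state = tuple(
                a * new + (1 - a) * old
                for new, old in zip(point, self._state)
            )
        return self._state

    def reset(self):
        """Forget history, e.g. when the hand leaves the camera's view."""
        self._state = None
```

A single `alpha` is the simplest possible knob: it cannot be both smooth for slow movements and responsive for fast ones, which is exactly the balance described above.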

The Future of Natural Interfaces

This project opened my eyes to the potential of natural user interfaces. While my implementation was relatively simple, it demonstrated that the technology exists to create more intuitive ways of interacting with computers.

Here are some more of my ideas:

- Architects could manipulate 3D building models with hand gestures

- Surgeons could control medical equipment without touching anything

Conclusion

What started as a childhood fascination with Iron Man's futuristic interface became a real-world project that taught me a lot about computer vision and practical problem-solving.

The technology isn't perfect yet, but it's getting closer to that seamless interaction we saw in the movies. Every gesture, every movement, every interaction brings us one step closer to a future where technology adapts to us.

The future of computing isn't just about faster processors or better screens – it's about creating interfaces that feel natural, intuitive, and magical.

This project represents just the beginning of what's possible with hand tracking technology. As computer vision and machine learning continue to advance, we're getting closer to making Tony Stark's interface a reality for everyone.

Dev Narang

Web developer and tech enthusiast sharing knowledge and experiences.

Comments (0)

Related Posts

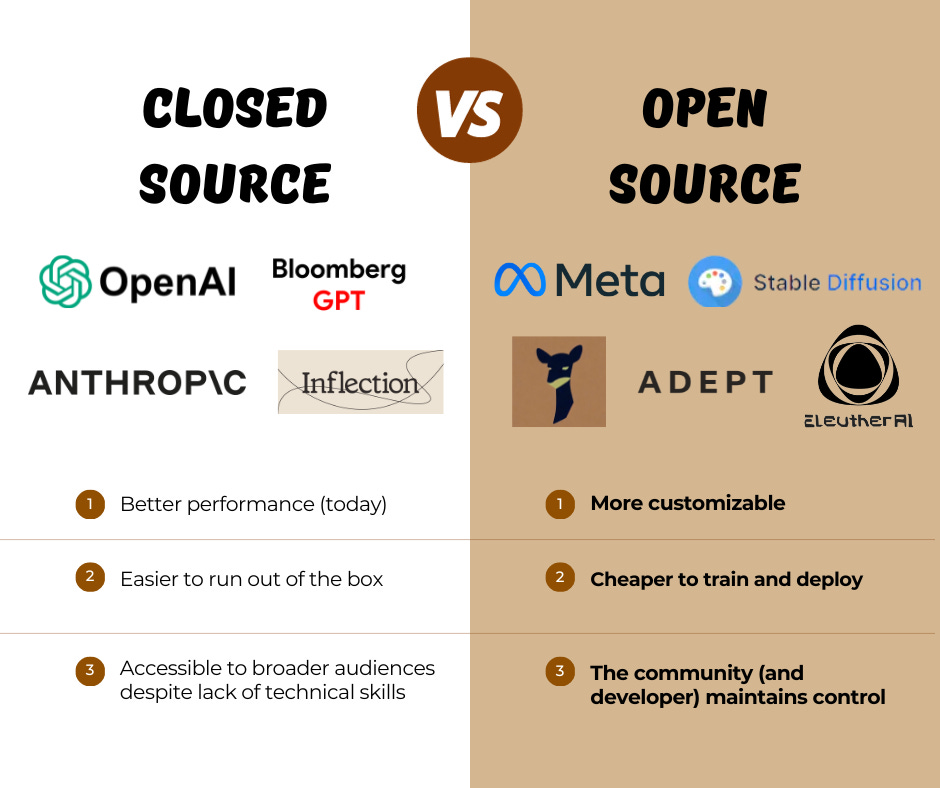

The 'Brain' You Own vs. The 'Brain' You Rent: Why Open Source Wins the AI Business War

OpenAI sells a fish; Meta gives you the genetics to breed your own. Why open-source AI models provide the customization, privacy, and control required to build a defensible business moat.

Building a Voice-Controlled 3D Design Assistant with MCP

From voice commands to projected 3D designs - how we used Model Context Protocol to create an agentic AI system that turns spoken language into interactive 3D scenes.